Sending the requests one after another is slow, so we use the parallel library to speed things up. It has a function called `mclapply()`, which takes a vector as its first argument and a function as its second. It also accepts the number of cores to use, which we pass through the `mc.cores` parameter.

Here the `scrape_page` function is called for each URL in the `urls` vector, with the calls spread across the requested cores. If `mc.cores` is not set, `mclapply()` falls back to the value of the `mc.cores` option, or to 2 cores when that option is unset.
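As a rough illustration of the `mclapply()` call described here, the sketch below runs without network access. The `scrape_page` body and the `example.com` URLs are placeholders invented for this example, not the article's actual scraper, and the choice of 2 cores is arbitrary.

```r
library(parallel)

# Hypothetical stand-in for the article's scrape_page(): it only builds a
# small record from the URL, so the sketch runs without network access.
scrape_page <- function(url) {
  list(url = url, slug = basename(url))
}

# Placeholder URLs, assumed for illustration only.
urls <- c(
  "https://example.com/product/a",
  "https://example.com/product/b",
  "https://example.com/product/c"
)

# mclapply() relies on forking, which is unavailable on Windows,
# where mc.cores must be 1.
n_cores <- if (.Platform$OS.type == "windows") 1L else 2L

# First argument: the vector; second: the function; mc.cores: worker count.
results <- mclapply(urls, scrape_page, mc.cores = n_cores)

# Results come back as a list in the same order as the input vector.
slugs <- vapply(results, function(r) r$slug, character(1))
print(slugs)
```

In a real scraper, `scrape_page` would send the request and parse the response, and each worker would handle a share of the URLs.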

#Webscraper tutorial code

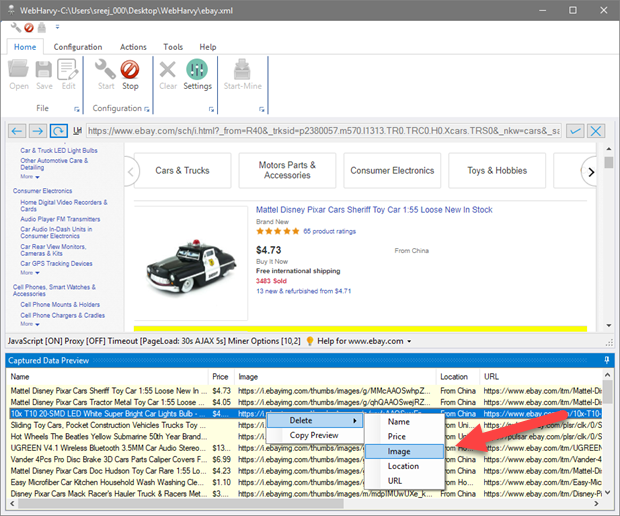

Create our first R scraper

The workflow of the scraper is as follows:

1. Navigate through the first 5 listing pages and collect all product URLs.
2. Visit each product page and collect the product data.
3. Save the collected data to a CSV file.

First, we need to import the required libraries, for example `library('parallel')`.

Send request to the website

We use the httr library to collect data from websites. It allows an R program to send HTTP requests and helps us handle the responses received from the website.

The next page URL comes from the next button in the HTML. Since the same XPath returns two results and we want only the first one to read the next page URL from the 'a' node, we wrap the XPath in parentheses () and index it. So an XPath ending in `page-numbers"]` becomes `(…page-numbers"])[1]`. We then use `html_attr` from the rvest library to collect the data.

We paginate through the listing pages and add the product URLs from each page to the same list. Once pagination is done, we have all the product URLs saved in a list, and we send requests to the product pages using the httr library.

Since there are many product URLs, and each request takes a few seconds to return over the network, the code waits for each response before executing the rest, which leads to a much longer execution time.
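The XPath indexing and `html_attr` steps can be sketched on a small inline HTML snippet. Everything in the snippet is assumed for illustration: the markup, the `product-link` and `next page-numbers` class names, and the `example.com` URLs are made up and are not the selectors of the site the article scrapes. The sketch also assumes rvest 1.0.0 or later, which provides `html_elements()`.

```r
library(rvest)

# Made-up listing page: two links share the same class, mimicking the
# situation where one XPath returns two results.
html <- '<html><body>
  <div class="products">
    <a class="product-link" href="https://example.com/product/a">A</a>
    <a class="product-link" href="https://example.com/product/b">B</a>
  </div>
  <div class="top-nav"><a class="next page-numbers" href="https://example.com/page/2">Next</a></div>
  <div class="bottom-nav"><a class="next page-numbers" href="https://example.com/page/2">Next</a></div>
</body></html>'

page <- read_html(html)

# Without an index, the XPath matches both "next" links.
all_next <- html_elements(page, xpath = '//a[@class="next page-numbers"]')

# Wrapping the expression in () and appending [1] keeps only the first match.
first_next <- html_elements(page, xpath = '(//a[@class="next page-numbers"])[1]')
next_url <- html_attr(first_next, "href")

# Collect the product URLs from the href attribute of each product link.
product_links <- html_elements(page, xpath = '//a[@class="product-link"]')
product_urls <- html_attr(product_links, "href")

# Save what was collected to a CSV file (a temporary path, for illustration).
csv_path <- file.path(tempdir(), "products.csv")
write.csv(data.frame(product_url = product_urls), csv_path, row.names = FALSE)
```

In a live scraper, `page` would instead be built from a response fetched with httr, and the loop over listing pages would repeat this extraction with each `next_url` until the page limit is reached.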

#Webscraper tutorial install

To install R on Linux, use your distribution's package manager from the terminal. To check the installed version, run the command `R --version`.

Install required libraries

To implement web scraping in R, we need the following libraries: httr, rvest, and parallel. To install them, launch the R console and run `install.packages("httr")`, which installs the package httr. Similarly, `install.packages("rvest")` installs rvest; parallel ships with R itself, so it normally needs no separate installation.

Note: To launch the R console, go to the terminal and type `R` (or `R.exe` on Windows).
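Before installing anything, it can help to check which of the required packages are already available. The sketch below is one possible way to do that; `missing_packages()` is a hypothetical helper written for this example, and the actual install call is left commented out because it downloads from CRAN.

```r
# Return the subset of `pkgs` whose namespace cannot be loaded in this
# R installation. missing_packages() is a hypothetical helper, not part
# of any library.
missing_packages <- function(pkgs) {
  available <- vapply(pkgs, requireNamespace, logical(1), quietly = TRUE)
  pkgs[!available]
}

needed <- c("httr", "rvest", "parallel")
to_install <- missing_packages(needed)

if (length(to_install) > 0) {
  message("Not installed yet: ", paste(to_install, collapse = ", "))
  # Uncomment to install; this downloads from CRAN and needs network access.
  # install.packages(to_install)
} else {
  message("All required packages are available.")
}
```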

Why R?

Almost all programming languages can be used to create a web scraper, but R has certain advantages when it comes to manipulating the extracted data. R has many libraries for data analysis and statistical modeling, which can be used to analyze the collected data.

#Webscraper tutorial how to

Data is everything in today’s digital age, and we come across immense amounts of publicly available data on the internet. To draw meaningful inferences, we need to bring this data together. We can collect the data from web pages using web scrapers. This article shows how to implement a web scraper in R.
